NVIDIA'S NEW NIGHTMARE IS SMALLER THAN THIS PERIOD.

(AMD), (NVDA), (GOOG), (MSFT), (META), (AMZN)

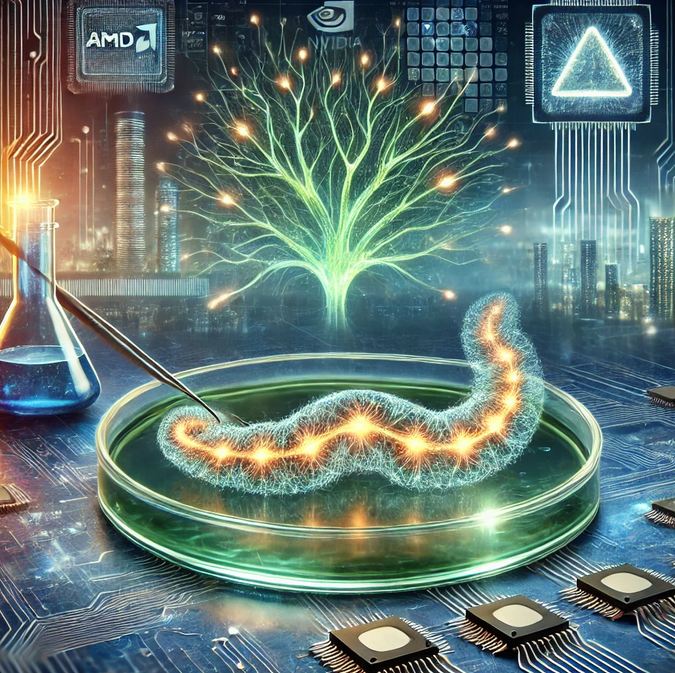

I was at MIT last month, watching a tiny roundworm wiggle around in a petri dish, when one of the researchers dropped a bombshell: "This creature," he said, pointing to something smaller than a period, "just helped us raise $250 million from AMD."

Welcome to the strange world of Liquid AI, where a worm with 302 neurons is teaching Silicon Valley how to build better artificial brains.

And by better, I mean cheaper, faster, and far more efficient.

Here's why I'm thrilled about this discovery: the AI industry has a dirty little secret. These massive language models everyone's obsessing over? They're burning through cash faster than a Tesla (TSLA) in Ludicrous mode.

GPT-4 reportedly cost over $100 million just to train. The electricity bill alone would make your eyes water.

But Liquid AI has found a different path. By studying how that little worm processes information, they've created neural networks that slash computing costs by 90% while matching the performance of the big boys.

No wonder AMD (AMD) just wrote them a check that pushed their valuation north of $2 billion.

For AMD, this isn't just another tech bet. They're gunning for Nvidia (NVDA), which currently controls roughly 80% of the AI chip market.

Last week, I spoke with some engineers at AMD who couldn't stop grinning about their new processors. They're talking about 5-10x better performance per watt compared to current GPUs.

With Nvidia reporting $18.4 billion in data center revenue for its fiscal fourth quarter ended January 2024, you can see why AMD is excited.

The timing couldn't be better. Companies are getting tired of building massive GPU farms that consume enough power to light up Las Vegas.

Google's (GOOG) DeepMind is sweating bullets. Microsoft (MSFT) is scrambling to get this technology into Azure.

Intel (INTC) is still trying to figure out which end is up. And Meta (META)? They're too busy building digital playgrounds to notice.

Even Amazon's (AMZN) AWS, which powers about 40% of the world's large language models, sees the writing on the wall.

They've already poured $100 million into AI optimization research. When Amazon starts writing checks that big, you know something's coming.

Just yesterday, a hedge fund manager friend called me and asked if I'd lost my mind getting excited about a worm-inspired AI company.

I reminded him that in 2002, Sydney Brenner shared the Nobel Prize for pioneering research on that same worm, whose nervous system his lab had mapped neuron by neuron.

Back then, nobody imagined this research would spawn a multibillion-dollar AI company. But that's how innovation works: the biggest breakthroughs often come from the smallest places.

For those looking into the AI sector, the signals are clear. While Liquid AI remains private, keep your eyes on AMD, Microsoft, and Amazon.

They're positioning themselves for the next big shift in AI. When the efficiency revolution hits quarterly earnings reports, you'll want to be ahead of the curve.

What fascinates me most isn't just the technology; it's the irony. In an industry obsessed with building bigger, more powerful systems, the solution to AI's biggest problems might come from studying one of the simplest nervous systems in nature.

And I know the tech bears keep telling us AI valuations are too high, the bubble's about to pop, head for the hills. But they're missing the point.

The rules have changed. This is not just about building bigger AI anymore; it's about building smarter AI. And sometimes, as our tiny worm friend shows us, smarter means smaller.

Just don't tell that to Nvidia yet. Let them figure it out when their next power bill arrives.